Where Meta's top AI scientists studied and why it matters. (AI-generated image used for representational purposes)

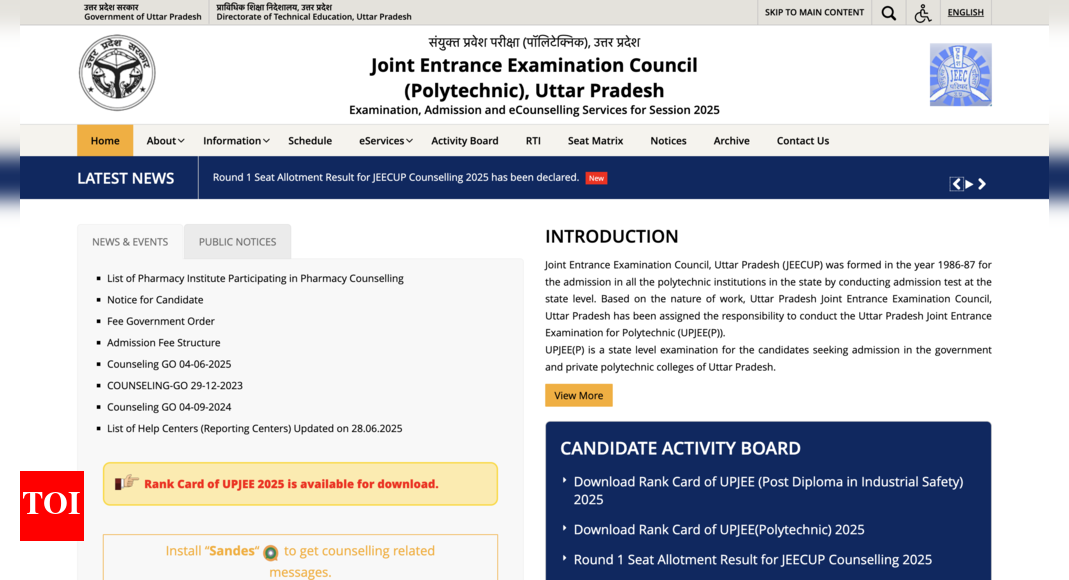

As the race for Artificial General Intelligence (AGI) intensifies, Mark Zuckerberg's Meta has launched a bold, founder-led moonshot: the Meta Superintelligence Lab (MSL). This elite group of technologists—poached from DeepMind, OpenAI, Anthropic and Google—represents some of the sharpest minds in AI today.

The MSL is powered not just by capital and ambition, but by a team of formidable researchers whose educational journeys span continents and disciplines. Their academic backgrounds reveal much about the global talent ecosystem shaping the future of AGI.

Meta's recent hiring spree, which brought in eleven standout researchers from the likes of DeepMind, OpenAI, Google and Anthropic, reinforces that high-impact AI today emerges from a fusion of rigorous theoretical grounding and interdisciplinary exposure.

These are individuals whose universities and research environments have shaped breakthrough thinking in large language models (LLMs), multimodal reasoning and AI safety.

Two themes emerge when examining where these scientists studied. First, most began their journey at world-class institutions in China or India before moving westward for graduate research or tech leadership. Second, the depth and range of their studies, which span reasoning, speech integration, image generation, and AI alignment, reveal the interdisciplinary demands of building truly intelligent systems.

Jack Rae: Grounding in CMU and UCL

Jack Rae, recruited from DeepMind for his work on LLMs and long-term memory, studied at Carnegie Mellon University and University College London. Both institutions are renowned for AI and machine learning research. Rae's academic training in computing and cognitive science equipped him to develop memory-augmented neural architectures that embedded practical reasoning into LLMs, capabilities later adopted in models like Gemini.

Pei Sun: Structured reasoning from Tsinghua to CMU

Pei Sun, also from DeepMind, earned his undergraduate degree from Tsinghua University before moving to Carnegie Mellon University for graduate study. His rigorous grounding in maths and structured reasoning contributed directly to his work on logical reasoning and problem solving in Google's Gemini project.

Trapit Bansal: IIT Kanpur to UMass Amherst

Trapit Bansal, formerly at OpenAI, studied at IIT Kanpur and completed graduate studies at UMass Amherst.

He specialised in “chain-of-thought” prompting and alignment, helping GPT-4 generate multi-step reasoning—an innovation that significantly advanced LLM coherence and reliability. He completed his BSc–MSc dual degree in Mathematics and Statistics at the Indian Institute of Technology (IIT) Kanpur, graduating in 2012.

After graduating, he worked briefly at Accenture Management Consulting (2012) and later as a Research Assistant at the Indian Institute of Science (IISc), Bengaluru (2013–2015). Bansal then moved to the University of Massachusetts Amherst, where he earned his MS in Computer Science in 2019 and his PhD in 2021. During his doctoral years, he interned at Facebook (2016), OpenAI (2017), Google Research (2018), and Microsoft Research Montréal (2020), building a rare interdisciplinary perspective across industry labs.

He joined OpenAI in January 2022 as a Member of Technical Staff, contributing to GPT-4 and leading development of the internal “o1” reasoning model.

In June 2025, he moved to Meta Superintelligence Lab, in one of the most high-profile hires in recent memory.

Shengjia Zhao: Bridging Tsinghua and Stanford

Shengjia Zhao, co-creator of both ChatGPT and GPT-4, also began at Tsinghua University and later joined Stanford for his PhD. His dual focus on model performance and safety helped lay the groundwork for GPT-4 as a reliable, multimodal AI.

Ji Lin: Optimisation from Tsinghua to MIT

Ji Lin, an optimisation specialist who contributed to GPT-4 scaling, studied at Tsinghua University before moving on to MIT.

His expertise in model compression and efficiency is vital for making giant AI models manageable and deployable.

Shuchao Bi: Speech-text expert at Zhejiang and UC Berkeley

Shuchao Bi earned his undergraduate degree at Zhejiang University in China before pursuing graduate education at UC Berkeley. His work on speech-to-text integration informs vital voice capabilities in GPT-4 and other multimodal systems.

Jiahui Yu: Gemini vision from USTC to UIUC

Jiahui Yu, whose work spans both Google and OpenAI, covering Gemini vision and GPT-4 multimodal design, studied at the University of Science and Technology of China (USTC) before heading to the University of Illinois Urbana-Champaign (UIUC), which is renowned for computer vision and graphics research.

Hongyu Ren: Safety education from Peking to Stanford

Hongyu Ren, an authority on robustness and safety in LLMs, earned his undergraduate degree at Peking University and completed graduate studies at Stanford, blending theoretical rigour with practical insight into model alignment.

Huiwen Chang: Image generation from Tsinghua and Princeton

Huiwen Chang, who worked on the Muse and MaskGIT systems while at Google, received a BEng from Tsinghua University and pursued graduate work at Princeton, focusing on next-generation image generation.

Johan Schalkwyk: Voice AI from Pretoria University

Voice-AI veteran Johan Schalkwyk led Google Voice Search. He studied at the University of Pretoria in South Africa and developed foundational technologies in speech recognition long before moving to Sesame AI and eventually MSL.

Joel Pobar: Infrastructure from QUT

Joel Pobar, formerly with Anthropic and now part of Meta's core team, studied at Queensland University of Technology (QUT) in Australia.

His expertise in large-scale AI infrastructure and PyTorch optimisation rounds out the team's ability to build at scale.

Why it matters

This constellation of academic backgrounds reveals key patterns. First, many team members started at elite institutions in China and India (Tsinghua, Peking, USTC, IIT Kanpur) before completing advanced study in North America or Europe. Such academic migration fosters the cross-pollination of ideas and technologies vital to AGI progress.

Second, the diversity in specialisations, from chain-of-thought reasoning and speech-text fusion to alignment and infrastructure optimisation, reflects a holistic approach to AGI development. No single breakthrough will suffice; each educational trajectory contributes a crucial piece of the intelligence puzzle.

Lastly, these researchers underscore the importance of rigorous mathematical and computational foundations.

Their trajectories, marked by early excellence in computing and top PhD programmes, show that AGI talent is born of sustained academic commitment, not an overnight spark. For today's students, this means investing in strong undergraduate programmes, targeting interdisciplinary research opportunities, and seeking environments that encourage open, foundational exploration.

In aggregate, the educational pedigree of Meta's superintelligence team isn't mere résumé-padding. It's the backbone of a strategy to crack AGI—a challenge that demands not just technical acumen, but a global, theory-driven, collaborative mindset.
